How to boot the Samsung Galaxy S7 modem with plain Linux kernel interfaces only

Thu 10 Sep 2020 15:43 CEST

Part of a series: #squeak-phone

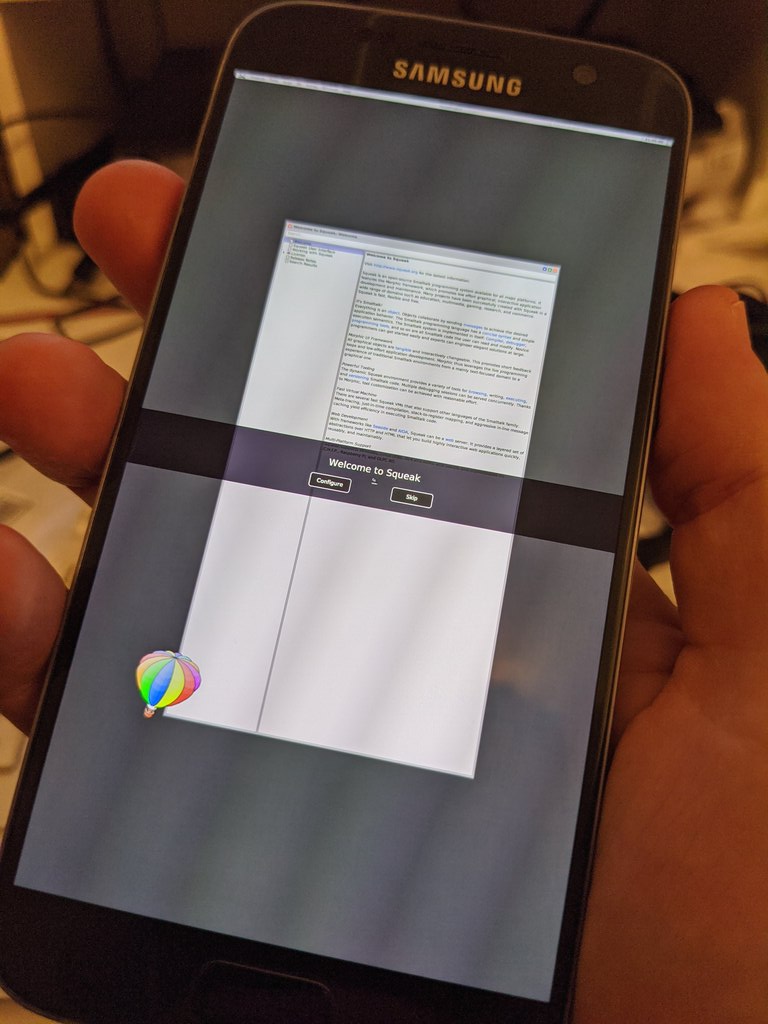

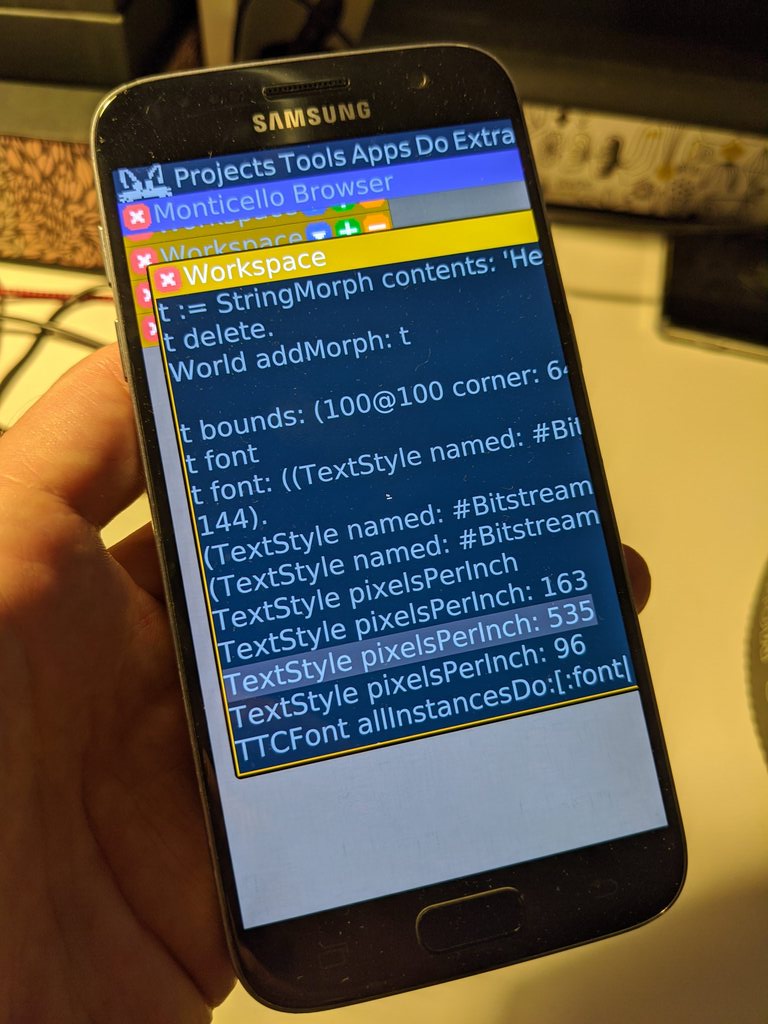

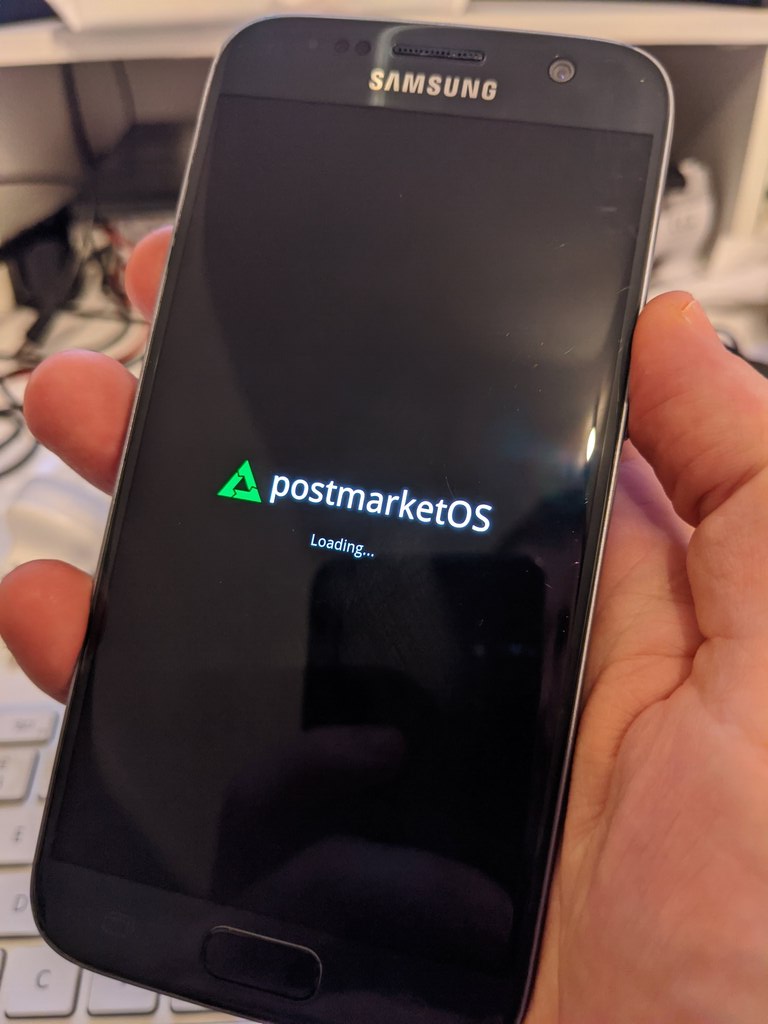

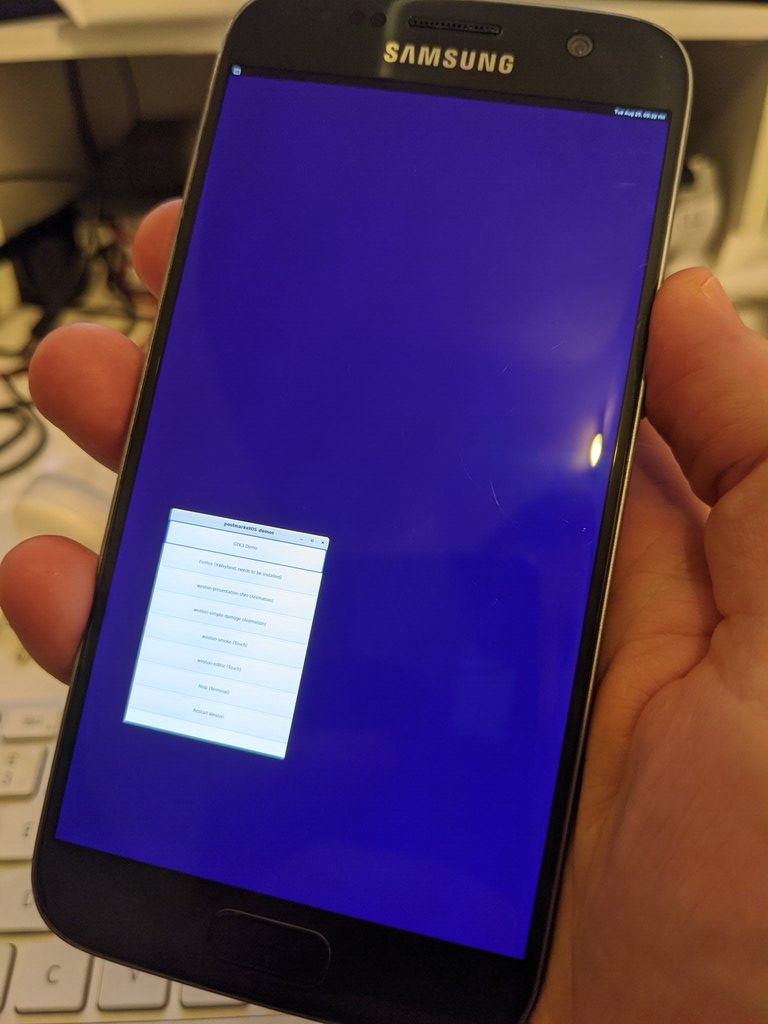

I’ve been running PostmarketOS on my Samsung Galaxy S7 recently. As far as I can tell, none of the available modem/telephony stacks supports the Galaxy S7 modem.

I was expecting to be able to just open /dev/ttyACM0 or similar and

speak AT commands

to it, like I used to be able to on Openmoko.

However, Samsung phones have a proprietary, undocumented1, unstandardized binary interface to their modems. Operating a modem is the same across members of the Samsung family, but each different handset seems to have a different procedure for booting the thing.

So I decided to reverse engineer (a fancy name for “running strace

on cbd and rild and reading a lot of kernel source code”) the

protocol.

The result is a couple of quick-and-dirty python scripts which, together, boot the modem and print out the messages it sends us. It’d be straightforward to extend it to, for example, send SMS, or to manage incoming and outgoing calls.

How Android (LineageOS) does it

LineageOS uses Samsung’s proprietary cbd and rild programs,

extracted as binary blobs from the stock firmware.

The rild program implements the

Android Radio Interface Layer (RIL),

talking directly to the modem over /dev/umts_ipc0.

The cbd program performs the modem boot and reset sequences, and is

started after rild is running by Android’s init. It talks to the

modem over /dev/umts_boot0.

The cbd program relies on the availability of the Android RADIO

partition, which contains the modem firmware, as well as on the

existence of a file nv_data.bin stored on the phone’s EFS

partition.

My S7, running LineageOS, started its cbd with the following

command-line:

/sbin/cbd -d -tss310 -bm -mm -P platform/155a0000.ufs/by-name/RADIOHere’s the “help” output of cbd, that we can use to decode this:

CP Boot Daemon

Usage: ./cbd [OPTION]

-h Usage

-d Daemon mode

-t modem Type [cmc22x, xmm626x, etc.]

-b Boot link [d, h, s, u, p, c, m, l]

d(DPRAM) h(HSIC) s(SPI) u(UART) p(PLD) c(C2C) m(shared Memory) l(LLI)

-m Main link [d, h, s, p, c, m, l]

d(DPRAM) h(HSIC) s(SPI) p(PLD) c(C2C) m(shared Memory) l(LLI)

-o Options [u, t, r]

u(Upload test) t(Tegra EHCI) r(run with root)

-p Partition# of CP binary

The command line, then, tells us a few interesting things:

- the modem is an “SS310”.

- it uses shared memory (corresponding to

drivers/misc/modem_v1/link_device_shmem.cin the kernel source code) to communicate - the modem firmware partition name is

RADIO - there’s an undocumented flag,

-P(cf. the documented-p) which lets you supply a partition path fragment rather than a partition number

That last is crucial for running the same binary on PostmarketOS,

which has a different layout of the files in /dev.

How libsamsung-ipc does it

The open-source libsamsung-ipc from the Replicant project handles the boot and communication processes for a number of (older?) Samsung handsets, but not the Galaxy S7. Once a modem is booted, libsamsung-ipc passes higher-level protocol messages back and forth between the modem and libsamsung-ril, which layers Android telephony support atop the device-independent abstraction that libsamsung-ipc provides.

Generally, modems are booted by a combination of ioctl calls and

uploads of firmware blobs, with specific start addresses and blob

layouts varying per modem type.

At first, I was concerned that I wouldn’t have enough information to

figure out the necessary constants for the S7 modem. However, luckily

just strace combined with dmesg output and kernel source code was

enough to get it working.

Booting the modem - qnd_cbd.py

The overall sequence is similar to, but not quite the same as, other Samsung models already supported by libsamsung-ipc.

Running strace on Samsung’s cbd yields the following steps.

Extract the firmware blobs. The firmware partition has a table-of-contents that has a known structure. From here, we can read out the chunks of data we will need to upload to the modem.2

Acquire a wake-lock. I don’t understand the Android wake-lock

system, but during modem boot, cbd acquires the ss310 wakelock.

Open /dev/umts_boot0. This character device is the focus of most

of the subsequent activity. I’ll call the resulting file descriptor

boot0_fd below.

Issue a modem reset ioctl. Send IOCTL_MODEM_RESET (0x6f21) to

boot0_fd.

Issue a “security request”. Whatever that is! Send

IOCTL_SECURITY_REQ (0x6f53) with mode=2, size_boot=0 and size_main=0

(like this).

According to cbd’s diagnostics3, this is asking

for “insecure” mode; the same ioctl will be used later to enter a

“secure” mode.

One interesting thing about this particular call to

IOCTL_SECURITY_REQ is that it answers error status 11 if you run it

as root. The Samsung cbd does a prctl(PR_SET_KEEPCAPS,

1)/setuid(1001) just before IOCTL_SECURITY_REQ, which appears

to give a happier result of 0 from the ioctl. However, if you ask

cbd to stay as root by supplying the command-line flag -or to it,

then it too gets error status 11 from the ioctl. Fortunately, the

error code seems to be ignorable and the modem seems to boot

successfully despite it! This makes me think that perhaps running

(UPDATE. Here’s what I think is going on. Setting mode=2

apparently asks for “insecure” mode, which allows uploading of

firmware chunks. If we omit the mode=2 call to IOCTL_SECURITY_REQ at this point, in this way, is optional.IOCTL_SECURITY_REQ,

the phone reboots if it has previously successfully booted the modem.

Later, mode=0 requests “secure” mode, which is what causes the phone

to reboot unless mode=2 is selected. So ultimately

IOCTL_SECURITY_REQ with mode=2 is mandatory, because otherwise

you’ll end up crashing hard each time you restart the modem daemon.)

Upload three binary blob chunks. In order, send the BOOT and

MAIN blobs from the firmware table-of-contents, followed by the

contents of the nv_data.bin file on the EFS partition.

Sending a blob is a slightly involved process. Repeat the following steps until you run out of blob to upload:

-

Read the next chunk into memory. The stock

cbdconfines itself to chunks of 62k (yes, 62k, not 64k) or smaller. The kernel doesn’t appear to care, but why mess with success? -

Prepare a

struct modem_firmwaredescriptor, pointing to the chunk.-

The

binaryfield should be the address in RAM of the start of the chunk you just read. -

The

sizefield is the total size of the blob being uploaded. -

The

m_offsetfield is an offset into the chunk of RAM reserved on the kernel side for uploaded blobs. TheBOOTblob goes in at offset 0, so itsload_addrfield from the firmware partition’s table-of-contents, which for me was 0x40000000, corresponds tom_offset0.As another example, for the first 62k chunk of the

MAINblob, which hasload_addr0x40010000,m_offsetshould be 0x10000, sinceMAIN’sload_addris 0x10000 greater thanBOOT’sload_addr. For the second 62k chunk,m_offsetshould be 0x1f800, and so on. -

The

b_offsetfield isn’t currently used by the kernel, but I suspect thatcbdfills it in anyway, so I do too: I set it to the offset within the firmware partition of the beginning of the chunk being uploaded. -

The

modefield is interpreted by the kernel simply on a zero/nonzero basis, despite some hints elsewhere that valid values are 0, 1 and 2. Set mode=0 for all the uploaded chunks, since this is whatcbddoes. -

Finally, the

lenfield is the length of the chunk to be uploaded.

-

-

Issue an ioctl

IOCTL_MODEM_XMIT_BOOTto boot0_fd, with argument a pointer to themodem_firmwaredescriptor you just filled in.

Note well that the chunks are to be read out of the firmware

partition, following the appropriate TOC entries, for the BOOT and

MAIN blobs, but chunks are to be read from nv_data.bin on the

EFS partition, and not anywhere in the RADIO partition, for the

NV blob.

Issue a second “security request”. This time, send

IOCTL_SECURITY_REQ (0x6f53) with mode=0, size_boot set to the size

of the BOOT blob from the TOC, and size_main set to the size of the

MAIN blob. For me, those values were 9572 and 40027244,

respectively. According to cbd’s diagnostics, this is asking for

“secure” mode.

Tell the modem to power on. This interacts with power management

code on the kernel side somehow. Issue ioctl IOCTL_MODEM_ON (0x6f19)

to boot0_fd.

Tell the modem to start its boot sequence. Issue

IOCTL_MODEM_BOOT_ON (0x6f22) to boot0_fd.

Tell the kernel to forward the firmware blobs to the modem. Issue

IOCTL_MODEM_DL_START (0x6f28) to boot0_fd.

At this point, cbd engages in a little dance with the newly-booted

modem, apparently to verify that it is running as expected. I don’t

know the sources of these magic numbers, I just faithfully reproduce

them:

- Write

0D 90 00 00to boot0_fd. - Read back four bytes. Expect them to be

0D A0 00 00. - Write

00 9F 00 00to boot0_fd. - Read back four bytes. Expect them to be

00 AF 00 00.

Tell the modem the boot sequence is complete. Issue

IOCTL_MODEM_BOOT_OFF (0x6f23) to boot0_fd.

Close boot0_fd, and release the wake-lock. At this point the modem is booted! Congratulations!

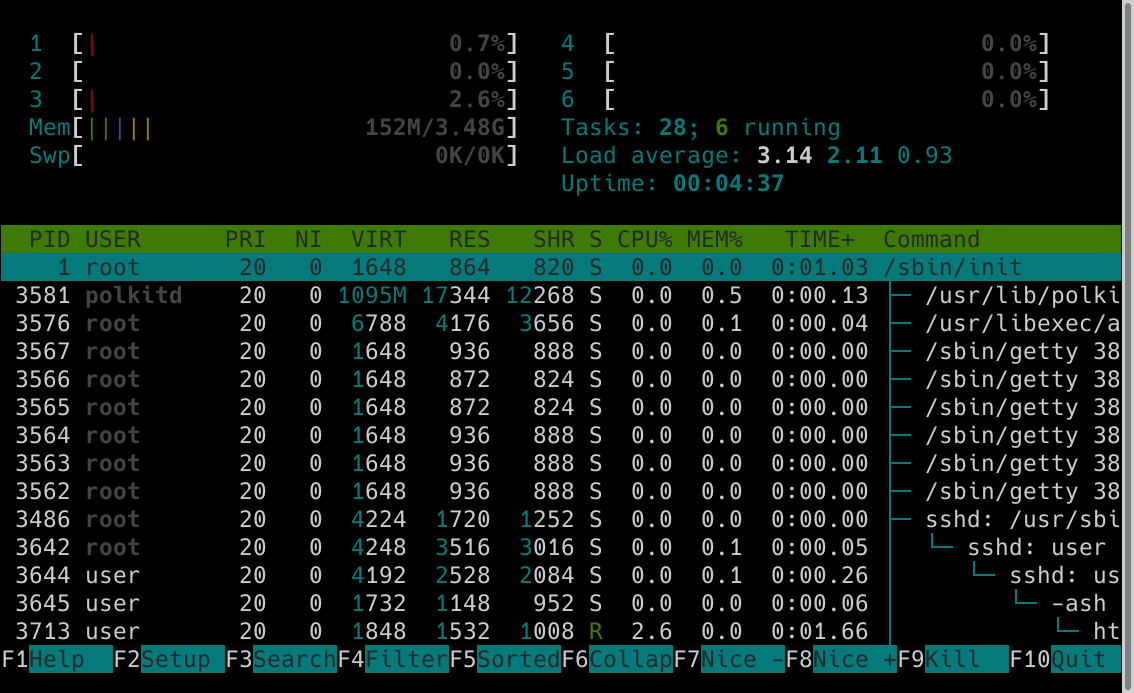

Monitoring the modem - qnd_cbd.py

After finishing the boot procedure, cbd goes into a loop apparently

waiting for administrative messages from the modem. It does this by

opening /dev/umts_boot0 again and reading from it. My script

does the same.

I don’t know what cbd does with the results: I’ve yet to see any

information come out of the modem this way.

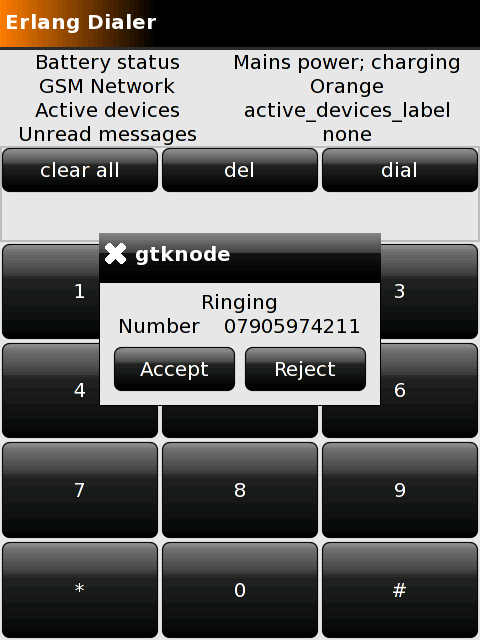

Interacting with the modem - qnd_ril.py

To interact with the modem, open /dev/umts_ipc0 and

/dev/umts_rfs0. IPC stands for the usual Inter-Process

Communication, but RFS apparently (?) stands for Remote File System.

Most modem interaction happens over the IPC channel. I don’t really know what the RFS channel is for yet.

Each packet sent to or from /dev/umts_ipc0 is formatted as a

struct sipc_fmt_hdr

that includes its own length, making parsing easy. Simply read and

write a series of sipc_fmt_hdrs (with appropriate body bytes tacked

on after each) from and to /dev/umts_ipc0.

Decoding them is another matter entirely! The libsamsung-ril

library does this well. However, a little bit more information can be

gleaned by matching bytes sent and received by rild to the

diagnostic outputs it produces. Here’s a lightly-reformatted snippet

of straced output of Samsung’s rild:

[pid 3969] 13:25:51.716591

read(18</dev/umts_ipc0>,

"\x0b\x00\xd3\x00\x02\x02\x03\x00\x01\x00\x01",

264192) = 11 <0.000448>

[pid 3969] 13:25:51.718454

writev(3<socket:[2555]>,

[

{"\x01\x81\x0f?jW_\x12\xc0\xc7*", 11},

{"\x06", 1},

{"RILD\x00", 5},

{"[G] RX: (M)CALL_CMD (S)CALL_INCOMING (T)NOTI l:b m:d3 a:00 [ 00 01 00 01 ]\x00", 75}

],

4) = 92 <0.000528>

Analysing the first seven bytes (the sipc_fmt_hdr) send to fd 18, we

see len=11, msg_seq=0xd3, ack_seq=0, main_cmd=2, sub_cmd=2,

cmd_type=3.

From the log message it prints, we can deduce that CALL_CMD=2, CALL_INCOMING=2, and NOTI=3. This lines up well with the definitions in libsamsung-ril, and it turns out that by observing the modem in operation you can learn a few more definitions not included in libsamsung-ril.

Next steps

Perhaps a good next step would be to translate this knowledge into support for the Galaxy S7 in libsamsung-ipc; for now, I don’t need that for myself, but it’s probably the Right Thing To Do. I’ll see if they’re interested in taking such a contribution.

-

A handful of open-source projects, notably libsamsung-ipc and libsamsung-ril, plus the relevant kernel source code, are the only real documentation available! ↩

-

Here’s the table of contents from my modem’s firmware partition:

00000000: 544f 4300 0000 0000 0000 0000 0000 0000 TOC............. ----------------------------- --------- name offset 00000010: 0000 0000 0002 0000 0000 0000 0100 0000 ................ --------- --------- --------- --------- loadadr size crc count/entryid 00000020: 424f 4f54 0000 0000 0000 0000 0002 0000 BOOT............ 00000030: 0000 0040 6425 0000 0888 f1a2 0000 0000 ...@d%.......... 00000040: 4d41 494e 0000 0000 0000 0000 8027 0000 MAIN.........'.. 00000050: 0000 0140 6cc4 6202 51b1 c353 0200 0000 ...@l.b.Q..S.... 00000060: 4e56 0000 0000 0000 0000 0000 0000 0000 NV.............. 00000070: 0000 ee47 0000 1000 0000 0000 0300 0000 ...G............ 00000080: 4f46 4653 4554 0000 0000 0000 00aa 0700 OFFSET.......... 00000090: 0000 0000 0056 0800 0000 0000 0400 0000 .....V.......... -

Actually the information about “insecure” and “secure”

IOCTL_SECURITY_REQcomes from a dmesg trace uploaded by someone anonymous to a pastebin I cannot find again. My own cbd doesn’t seem to produce these diagnostics. Sorry. ↩